Automated evaluation is necessary but not sufficient for building high-quality AI.

Automated evaluation lets teams move much faster, but it can't fully replace human judgment.

Human annotation is still essential for validating responses and making sure they hold up in real-world scenarios.

What we built

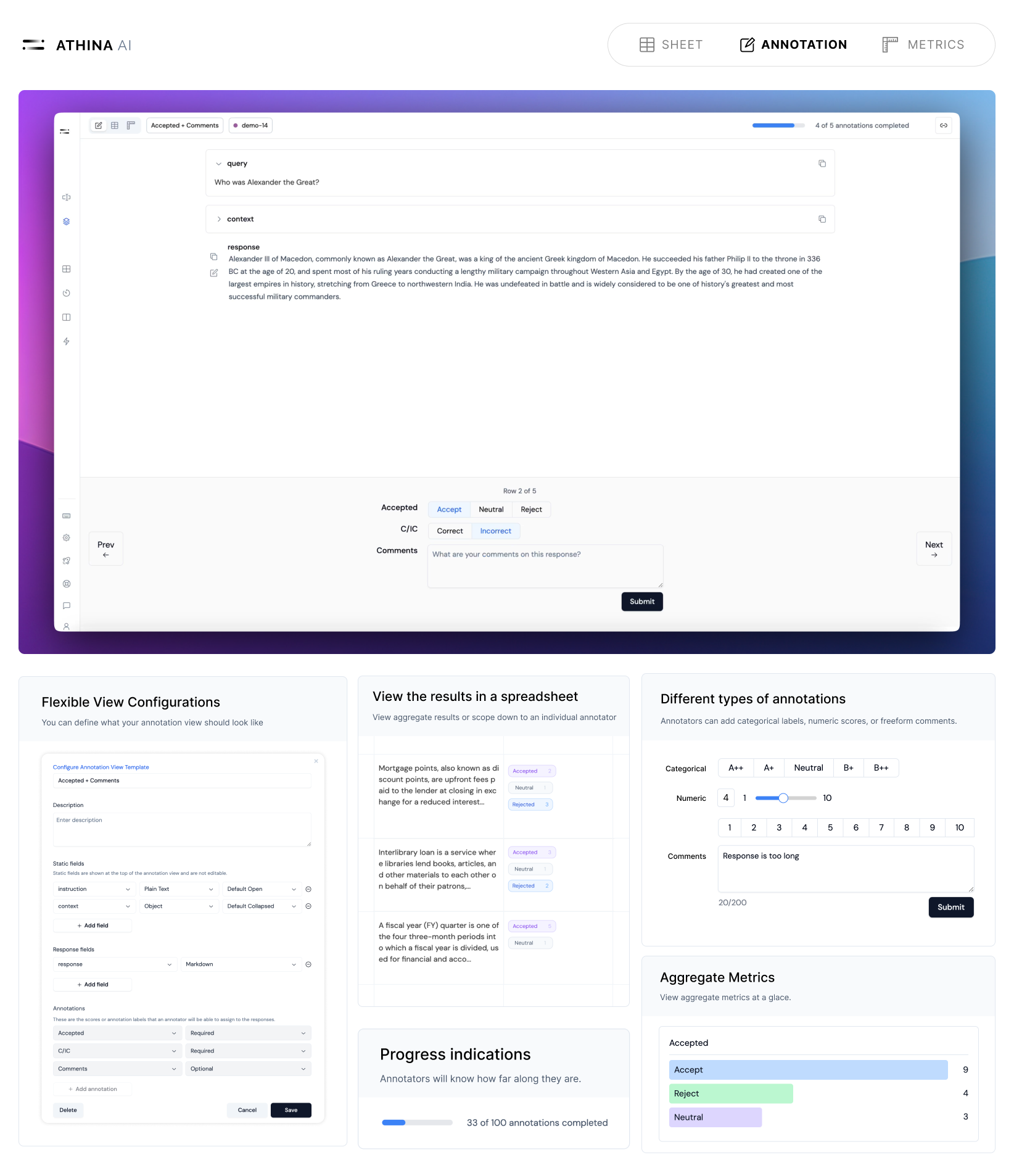

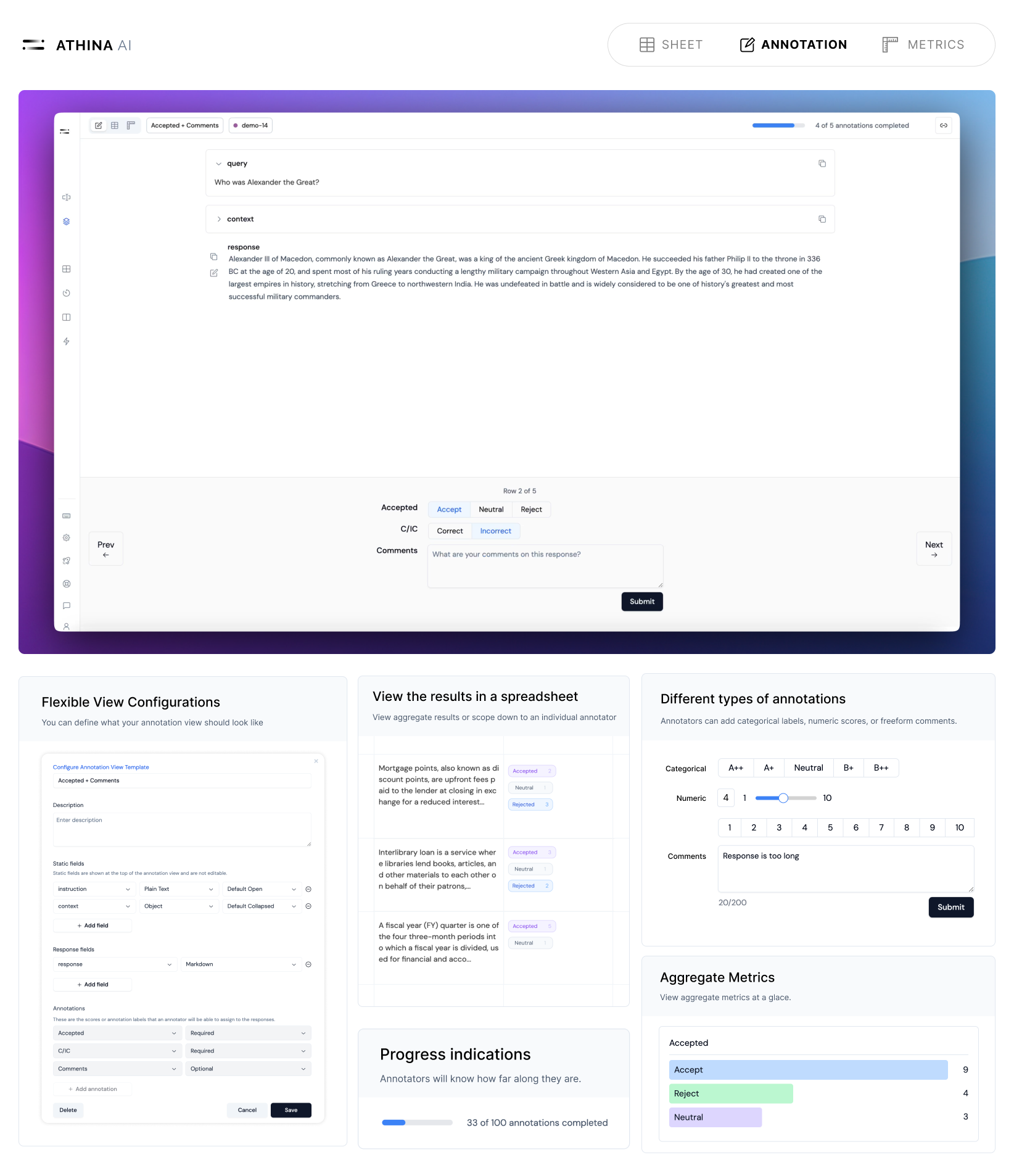

Annotation mode is a flexible UI within Athina IDE that lets your team collaborate to annotate datasets quickly.

- Support for Multiple Annotators: Multiple annotators can add scores and labels to the same dataset. You can view aggregate scores or the scores from an individual annotator.
- Flexible Annotation Views: You can configure an annotation view with exactly the fields you need, in the format you need them.
- Side-by-Side View: We added a side-by-side view mode so annotators can easily compare two responses.
- Flexible Scoring: Annotation mode supports both categorical and numeric scoring of LLM responses, so you can choose how responses are evaluated.
- Freeform Comments: Annotators can leave detailed comments alongside numeric or categorical scores.
- Response Editing: Annotators can edit LLM responses directly in annotation mode, making it easier to refine the outputs in a dataset.
- Multiple Viewing Modes: You can view annotation scores and comments in two ways:
  - Spreadsheet UI: A table-like view for easy navigation of annotations.
  - Metrics View: A visual representation of the scores and labels.

We're super excited about this because teams can now manage all their datasets, evaluations, and annotations in one place, which streamlines the workflow and makes collaboration easier.